Context Analysis — Part 1

Why is context important in NLP?

The ability for humans to understand each other, both explicitly and implicitly gives an idea of the ultimate level of social ability to aspire for in relation to a chat bot. I’m not talking merely about how the context within a sentence can alter the meaning of a word, though that is also important to consider. I’m talking about how sentences and phrases contain ambiguities that require contextual understanding to decipher, and lack of thereof only constrains understanding to a limited scope. After all, when we ask follow up question we, as beings that avoid repetitiveness, prefer for our fellow partner in conversation to implicitly understand what it is we refer to. So why shouldn’t we hold chat bots to these same standards, and strive to develop and manifest them?

Of course, context analysis isn’t limited to just chat bots. It’s just that it’s essentially an advanced component of NLP systems that considers a factor that is bound to make analyses, responses, and other following NLP tasks more ‘correct’ and thus more desirable.

Lexalytics has another viewpoint about what contextual analysis has to offer:

“The goal of natural language processing (NLP) is to find answers to four questions: Who is talking? What are they talking about? How do they feel? Why do they feel that way? This last question is a question of context.”

Right now, I am going to try to associate every one of those goals/questions with an NLP feature.

- What are they talking about ? — Named Entity Recognition / Part of Speech Tagging

- How do they feel ?— Sentiment Analysis, figuring out negative or positive connotation (a form of context)

- Who is talking ? — Coreference resolution might be the answer to this one after a little bit of googling and looking into

- Why do they feel that way ? Thematic extraction and context determination, an explanation of the why behind the results of sentiment analysis (positive or negative connotation)

Methods of Context Analysis

- N-grams

- Noun Phrase Extraction

- Themes

- Facets

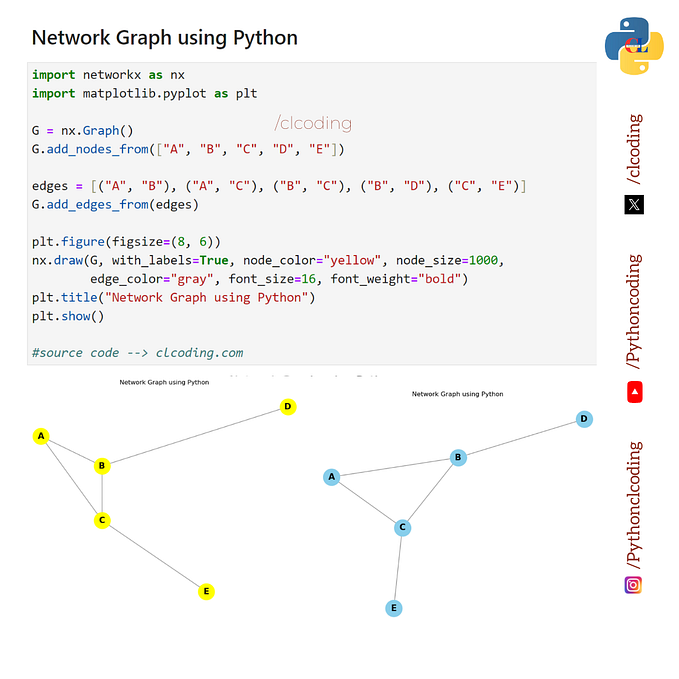

N-gram Language Model

The n in n-gram represents the size of a window in a text. N-grams exist as monograms (1 word), bigrams (2 words), trigrams (3 words), and so on. These n-grams are used in many NLP tasks. For example, suppose we are asked to complete a sentence.

“We went to Italy and _______”

The n-gram language model assumes that the best phrase to fill in the blank above can be calculated by finding the word with the highest probability of following the n-gram. The language model would search through the corpus for occurrences of the n-word and look for the word that follows it most directly. So when n = 1, the model is searching for the monogram, “and”. There are bound to be many occurences of “and”, so the word which happens to follow “and” the most is a coincedental and would most likely not fit very well within the context of the sentence. However, something very interesting happens if you increase n. As n gets larger, the model searches for, per say a bigram or a trigram. The search phrase gets more specific as it begins to resemble the sentence above, and thus the word with the highest frequency of following a large n-gram is more likely to fit within the context of the sentence.

N-grams are a way of measuring value in relationship to the degree of specificity. This aspect of the n-grams can be useful within the realm of context analysis. We have already defined n-grams to be words or phrases of a particular window size within a text.

If we iterate through a text, we can gather all the n-grams there are and analyze them. For example, while collecting monograms we obtain the 1st word, 2nd word, 3rd word, and so on until the nth word where n is the number of words in a text. While collecting bigrams, we obtain the phrases 1st & 2nd word, 2 & 3rd word, 3rd & 4th word, and so on until the (n-1)th and nth word. This process can be repeated similarly for trigrams, quadgrams, etc., etc., etc. Previously we discussed how as n-grams gets larger, so too does the specificity. This specificity provides alternate levels of thematic insight. Trigrams provide a much too narrow lens, while monograms are so open to interpretation that they have almost no meaning. Bigrams seem to be the option in the middle which aren’t too broad nor specific, which is why they capture “key phrases and themes that provide context for entity settlement” (Lexalytics).

One drawback to worry about is that a lot of n-grams are being collected, but most of them are not actually of much worth. A stopwords list is essential to cutting down the list of n-grams that actually contribute to the essence of a text. In particular, when dealing with context analysis using n-grams the stop words list must be extremely expansive.

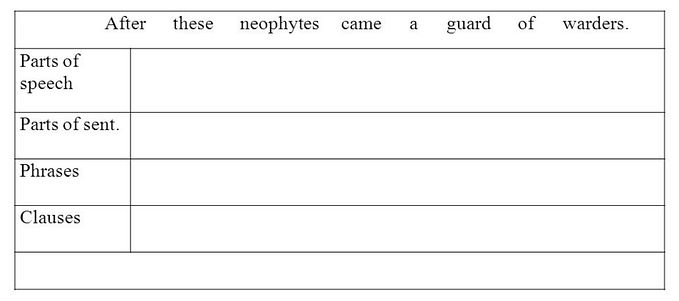

Noun Phrase Extraction

Noun phrase extraction assumes that n-grams are valuable in context analysis if they involve nouns. Nouns are very valuable because they are either subjects or objects in grammar. Thus, they represent entities that are substantial and of importance.

Part-of-speech tagging is important because the surrounding words can indicate stance and perspective of the nouns or towards the nouns. Part-of-speech tagging labels words surrounding nouns in phrases. In doing so, verbs and adjective can become very useful because they indicate the relationship between nouns, thereby indicating the relationship between entities.

Noun phrase extraction uses patterns of part of speech to identify phrases of valuable context. Stop words consist primarily of parts of speech that irrelevant or lack meaning. In doing so, noun phrase extraction is able to easily identify what is being discussed and with some effort the stance towards the main subject of the text.

However, a clear draw back is that there is no way to emphasize the value of one noun over another. It is quite clear noun phrase extraction can be effective in context analysis, but ascertaining which is most central to a text is a problem that cannot be solved by this method.

I will discuss themes and facets within my next article.

Sources and Other Interesting Reading Material

https://www.lexalytics.com/blog/context-analysis-nlp/

https://www.amazon.science/publications/context-analysis-for-pre-trained-masked-language-models